Russell Coker: Weather and Boinc

I just wrote a Perl script that looks at the Australian Bureau of Meteorology (BoM) pages to find the current temperature in an area and then adjusts BOINC settings accordingly. The Perl script (in this post after the break, which shouldn't be in the RSS feed) takes the URL of a Bureau of Meteorology observation point as ARGV[0] and parses that page to find the current (within the last hour) temperature. The remaining command line arguments are of the form 24:100 and 30:50, which indicate that below 24C 100% of CPU cores should be used and below 30C 50% of CPU cores should be used. At or above the highest threshold listed, the script sets 0%. In warm weather, having a couple of workstations in a room running BOINC (or any other CPU intensive task) will increase the temperature and also cause excessive noise from cooling fans.
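
For anyone who would rather not read Perl, the threshold rule can be sketched in a few lines of Python. This is only an illustration of the rule described above, not the script itself; the function name `pick_percent` is made up for the example.

```python
def pick_percent(temp_c, rules):
    """Return the CPU percentage for the lowest threshold above temp_c.

    rules is a list of "threshold:percent" strings such as ["24:100", "30:50"].
    If temp_c is at or above every threshold the result is 0.
    """
    thresholds = []
    for rule in rules:
        limit, percent = rule.split(":")
        thresholds.append((float(limit), int(percent)))
    # Check thresholds from coldest to warmest; the first one that the
    # temperature is strictly below decides the percentage.
    for limit, percent in sorted(thresholds):
        if temp_c < limit:
            return percent
    return 0


if __name__ == "__main__":
    for temp in (18.5, 26.0, 33.2):
        print(temp, pick_percent(temp, ["24:100", "30:50"]))
```

Sorting the thresholds as numbers means the order of the arguments on the command line does not matter.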

To change the number of CPU cores used, the script changes /etc/boinc-client/global_prefs_override.xml and then tells BOINC to reload that config file. This code is a little ugly (it doesn't properly parse XML, it just replaces a line of text) and could fail on a valid configuration file that wasn't produced by the current BOINC code.
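
A less fragile alternative would be to parse the file as XML. The following is a minimal Python sketch of that idea, not what the script does. The function name `set_max_ncpus_pct` and the sample document are assumptions made for the example; only the `max_ncpus_pct` element name comes from the script.

```python
import xml.etree.ElementTree as ET

# Illustrative sample only; a real override file may contain other settings.
SAMPLE = """<global_preferences>
   <max_ncpus_pct>100.000000</max_ncpus_pct>
   <cpu_usage_limit>100.000000</cpu_usage_limit>
</global_preferences>
"""


def set_max_ncpus_pct(xml_text, percent):
    """Return xml_text with <max_ncpus_pct> set to percent.

    The element is created under the root element if it is missing.
    """
    root = ET.fromstring(xml_text)
    node = next(root.iter("max_ncpus_pct"), None)
    if node is None:
        node = ET.SubElement(root, "max_ncpus_pct")
    # Use six decimal places, the same format the script writes.
    node.text = f"{float(percent):.6f}"
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(set_max_ncpus_pct(SAMPLE, 50))
```

One trade-off is that ElementTree's default parser drops XML comments, so a hand-edited file would lose them on rewrite.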

The parsing of the BoM page is a little ugly too. It relies on the HTML code in the BoM page; they could make a page that looks identical but breaks the parsing, or even a page that contains the same data but looks different. It would be nice if the BoM published some APIs for getting the weather. One thing that would be good is TXT records in the DNS. DNS supports caching with a specified lifetime and is designed for high throughput in aggregate. If you had a million IoT devices polling the current temperature and forecasts every minute via DNS, the people running the servers wouldn't even notice the load, while a million devices polling a web based API would be a significant load. As an aside, I recommend playing nice and only running such a script every 30 minutes. The BoM page seems to be updated on the half hour, so I have my cron jobs running at 5 and 35 minutes past the hour.
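
Matching on the structure of the table cells rather than on whole lines of text would cope with some layout changes. Here is a hedged Python sketch using the standard `html.parser` module. It assumes the cells carry `headers` attributes containing `t1-datetime` and `t1-tmp`, which is what the regular expressions in the script look for; the sample HTML, class name and function name are invented for the example and are not a copy of a real BoM page.

```python
from html.parser import HTMLParser

# Invented sample in the shape the script's regular expressions imply.
SAMPLE = """
<table>
<tr class="rowleftcolumn">
<td headers="t1-datetime">06/02:30pm</td>
<td headers="t1-tmp">17.4</td>
</tr>
<tr class="rowleftcolumn">
<td headers="t1-datetime">06/02:00pm</td>
<td headers="t1-tmp">17.1</td>
</tr>
</table>
"""


class ObservationParser(HTMLParser):
    """Collect the text of the first <td> seen for each wanted headers id."""

    def __init__(self, wanted):
        super().__init__()
        self.wanted = set(wanted)
        self.values = {}
        self._current = None

    def handle_starttag(self, tag, attrs):
        if tag != "td":
            return
        for header_id in (dict(attrs).get("headers") or "").split():
            if header_id in self.wanted and header_id not in self.values:
                self._current = header_id
                self.values[header_id] = ""
                return

    def handle_data(self, data):
        if self._current is not None:
            self.values[self._current] += data

    def handle_endtag(self, tag):
        if tag == "td":
            self._current = None


def latest_observation(html):
    """Return (timestamp string, temperature) from the first matching cells."""
    parser = ObservationParser(["t1-datetime", "t1-tmp"])
    parser.feed(html)
    parser.close()
    stamp = parser.values.get("t1-datetime")
    temp = parser.values.get("t1-tmp")
    if stamp is None or temp is None:
        raise ValueError("observation cells not found")
    return stamp.strip(), float(temp)


if __name__ == "__main__":
    print(latest_observation(SAMPLE))
```

This still breaks if the `headers` values are renamed, so it reduces the fragility without removing it.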

If this code works for you then that's great. If it merely acts as an inspiration for developing your own code then that's great too! BOINC users outside Australia could replace the code for getting meteorological data (or even interface to a digital thermometer). Australians who use other CPU intensive batch jobs could take the BoM parsing code and replace the BOINC related code. If you write scripts inspired by this please blog about it and comment here with a link to your blog post.

    #!/usr/bin/perl
    use strict;
    use Sys::Syslog;

    # Example observation page: St Kilda Harbour RMYS
    # http://www.bom.gov.au/products/IDV60901/IDV60901.95864.shtml
    my $URL = $ARGV[0];

    # Read the page through wget; the trailing "|" makes open() read from a pipe.
    open(IN, "wget -o /dev/null -O - $URL |") or die "Can't get $URL";

    # Skip ahead to the first observation row.
    while(<IN>) {
      if($_ =~ /tr class=.rowleftcolumn/) {
        last;
      }
    }

    # Return the contents of the table cell whose headers attribute is
    # t1-<name>, or undef if the line is not that cell.
    sub get_data {
      if(not $_[0] =~ /headers=.t1-$_[1]/) {
        return undef;
      }
      $_[0] =~ s/^.*headers=.t1-$_[1]..//;
      $_[0] =~ s/<.td.*$//;
      return $_[0];
    }

    my @datetime;
    my $cur_temp = -100;

    # Read cells until the end of the row.
    while(<IN>) {
      chomp;
      if($_ =~ /^<.tr>$/) {
        last;
      }
      my $res;
      if($res = get_data($_, "datetime")) {
        @datetime = split(/\//, $res);
      }
      elsif($res = get_data($_, "tmp")) {
        $cur_temp = $res;
      }
    }
    close(IN);
    if($#datetime != 1 or $cur_temp == -100) {
      die "Can't parse BOM data";
    }

    my ($sec,$min,$hour,$mday,$mon,$year,$wday,$yday,$isdst) = localtime();

    # The observation must be from today or yesterday.
    if($mday - $datetime[0] > 1 or ($datetime[0] > $mday and $mday != 1)) {
      die "Date wrong\n";
    }

    # Minutes since local midnight today. The minutes field ends in am/pm,
    # and 12am/12pm count as hour zero before the pm offset is added.
    my @timearr = split(/:/, $datetime[1]);
    my $mins = ($timearr[0] % 12) * 60 + $timearr[1];
    if($timearr[1] =~ /pm/) {
      $mins += 720;
    }
    if($mday != $datetime[0]) {
      # Yesterday's observation is a day earlier than today's clock.
      $mins -= 1440;
    }
    if($mins + 60 < $hour * 60 + $min) {
      die "page outdated\n";
    }

    # Map each temperature threshold to a CPU percentage (arguments like 24:100).
    my %temp_hash;
    foreach ( @ARGV[1..$#ARGV] ) {
      my @tmparr = split(/:/, $_);
      $temp_hash{$tmparr[0]} = $tmparr[1];
    }
    # Sort numerically so that 9 sorts before 24.
    my @temp_list = sort { $a <=> $b } keys(%temp_hash);
    my $percent = 0;
    my $i;
    # Walk down from the highest threshold; the lowest threshold that is still
    # above the current temperature wins.
    for($i = $#temp_list; $i >= 0 and $temp_list[$i] > $cur_temp; $i--) {
      $percent = $temp_hash{$temp_list[$i]};
    }

    my $prefs = "/etc/boinc-client/global_prefs_override.xml";
    open(IN, "<$prefs") or die "Can't read $prefs";
    my @prefs_contents;
    while(<IN>) {
      push(@prefs_contents, $_);
    }
    close(IN);

    openlog("boincmgr-cron", "", "daemon");

    # Extract the current value from the <max_ncpus_pct> line.
    my @cpus_pct = grep(/max_ncpus_pct/, @prefs_contents);
    my $cpus_line = $cpus_pct[0];
    $cpus_line =~ s/..max_ncpus_pct.$//;
    $cpus_line =~ s/^.*max_ncpus_pct.//;
    if($cpus_line == $percent) {
      syslog("info", "Temp $cur_temp" . "C, already set to $percent");
      exit 0;
    }

    # Write a new file and rename it over the old one.
    open(OUT, ">$prefs.new") or die "Can't write $prefs.new";
    for($i = 0; $i <= $#prefs_contents; $i++) {
      if($prefs_contents[$i] =~ /max_ncpus_pct/) {
        print OUT " <max_ncpus_pct>$percent.000000</max_ncpus_pct>\n";
      }
      else {
        print OUT $prefs_contents[$i];
      }
    }
    close(OUT);
    rename "$prefs.new", "$prefs" or die "can't rename";
    system("boinccmd --read_global_prefs_override");
    syslog("info", "Temp $cur_temp" . "C, set percentage to $percent");
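
The check that the observation is recent can also be sketched in Python. This is a rough illustration under assumptions: the timestamp is taken to look like `06/02:30pm` (day of month, then a 12 hour time), which is what the splitting on `/` and `:` in the script implies. The function name is made up, and the sketch makes two choices of its own: 12am and 12pm count as hour zero before the pm offset is added, and an observation stamped with a different day is assumed to be from yesterday.

```python
from datetime import datetime


def observation_age_minutes(stamp, now):
    """Return how many minutes before `now` the observation was taken.

    stamp looks like "06/02:30pm"; now is a datetime in the same timezone.
    """
    day_text, time_text = stamp.split("/")
    suffix = time_text[-2:].lower()
    if suffix not in ("am", "pm"):
        raise ValueError("expected a time ending in am or pm: " + stamp)
    hour_text, minute_text = time_text[:-2].split(":")
    # Minutes since midnight on the day of the observation.
    minutes = (int(hour_text) % 12) * 60 + int(minute_text)
    if suffix == "pm":
        minutes += 720
    if int(day_text) != now.day:
        # Assume yesterday: shift the observation a day before today's clock.
        minutes -= 1440
    return now.hour * 60 + now.minute - minutes


if __name__ == "__main__":
    now = datetime(2021, 7, 6, 14, 55)
    age = observation_age_minutes("06/02:30pm", now)
    print("observation is", age, "minutes old; stale:", age > 60)
```

A caller would treat anything older than 60 minutes as stale, matching the "within the last hour" rule.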

Despite having worked on a

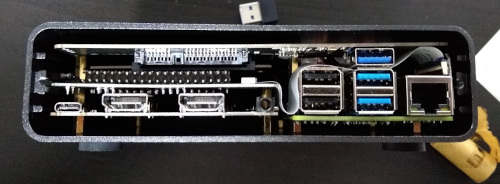

I managed to break a USB port on the Desk Pi. It has a pair of forward facing ports; I plugged my wireless keyboard dongle into one and, when trying to remove it, the solid spacer bit in the socket broke off. I've never had this happen to me before and I've been using USB devices for 20 years, so I'm putting the blame on a shoddy socket.

The first drive I tried was an old Crucial M500 mSATA device. I have an adaptor that makes it look like a normal 2.5" drive so I used that. Unfortunately it resulted in a boot loop; the Pi would boot its initial firmware, try to talk to the drive and then reboot before even loading Linux. The DeskPi Pro comes with an m2 adaptor and I had a spare m2 drive, so I tried that and it all worked fine. This might just be power issues, but it was an unfortunate experience, especially after the USB port had broken off.

(Given I ended up using an M.2 drive another case option would have been the

The case is a little snug; I was worried I was going to damage things as I slid it in. Additionally the construction process is a little involved. There's a good set of instructions, but there are a lot of pieces and screws involved. This includes a couple of

I hate the need for an external USB3 dongle to bridge from the Pi to the USB/SATA adaptor. All the cases I've seen with an internal drive bay have to do this, because the USB3 isn't brought out internally by the Pi, but it just looks ugly to me. It's hidden at the back, but meh.

Fan control is via a USB/serial device, which is fine, but it attaches to the USB C power port which defaults to being a USB peripheral. Raspbian based kernels support device tree overlays, which allow easy reconfiguration to host mode, but for a Debian based system I ended up rolling my own dtb file. I changed

Previously:

In May, I got selected as a

Welcome to gambaru.de. Here is my monthly report that covers what I have been doing for Debian. If you're interested in Java, Games and LTS topics, this might be interesting for you.

Debian Games

Often, when I mention how things work in the interactive theorem prover [Isabelle/HOL] (in the following just Isabelle

long time no blog post. & the

If you have a bunch of machines running OpenBSD for firewalling purposes, which is pretty standard, you might start to use source control to maintain the rulesets. You might go further, and use some kind of integration testing to deploy changes from your revision control system into production.

Of course before you deploy any

Here is my monthly update covering what I have been doing in the free software world (

A brown bag bug fix release 0.0.3 of

Around 2 decades back and a bit more I was introduced to computers. The top of the line was the 386DX, which was mainly used for fat servers, and some lucky institutions had the 386SX where, if we were lucky, we were able to play some games. I was pretty bad at Prince of Persia and most of the games of the era, as most of them depended on lightning reflexes which I didn't possess. Then 1997 happened and I was introduced to GNU/Linux, but my love for games continued even though I was bad at most of them. The only saving grace was turn-based RPGs (role-playing games) which didn't have permadeath, so you could plan your next move. Sometimes a wrong decision would lead to a place from which it was impossible to move further. As the decision had been taken far, far back, the only recourse was to replay the game, which eventually led to giving up on most games of that kind.

Then in/around 2000 Maxis came out with

[1] A very surprising side-effect of that commit was that the (original) auto-hinter could now solve a complicated haskell transition. Turns out that it works a lot better when you give correct information!
